NIGEL STANFORD: THE SHAPE OF SOUND

Nigel Stanford is a multi-instrumentalist, composer, and creative artist with an extensive background in software engineering. It’s a dynamic combination, one that lets him move fluidly between scientific and musical innovation. Like many who explore the crossover between art and science, Stanford has a flair for finding ways in which scientific tools or devices might be repurposed, reimagined, or coaxed into producing striking visuals to complement his music. His videos combine composition with unusual methods of audio visualisation, incorporating robotic instruments, scientific apparatus, and experimental devices. These inventive works, including Cymatics (2014) and Automatica (2017), have gone viral: at the time of writing, Cymatics has been watched over 35 million times. The popularity is understandable, because he really does push boundaries; he often feels as though he’s ‘writing a soundtrack for a sci-fi film that hasn't yet been made’. His aesthetic universe seems to have few conceptual limits: intergalactic travel is possible, robots behave as humans might, and inert matter comes to life. This broad, experimental canvas allows Stanford to fulfil his central artistic aim: ‘to … reinforce the music in a visual way.’ But his process cuts both ways. The concepts, images, and apparatus he uses also feed back into his compositions, as he explains: ‘what [is] physically possible in terms of the experiments and visuals, [sets] the rules around what the music [can] do.’ We spoke to the innovator about his creative process, the science behind those viral hits, and his most recent project, UltraWave.

Cymatics – Visualising Resonance

A recurring theme in Stanford’s work is visible sound. Baroque composers might have evoked the arrival of spring with high-pitched flute notes as sonic signifiers, and more recent works, such as Pink Floyd’s ‘Animals,’ Biomusic, or Brian Eno’s ‘Bloom’ app, have similarly used sound to describe images, allowing our minds to envisage the musicians’ dreams and visions. In his video for Cymatics, Stanford pushes this audio-visual interchange still further. For example, the low frequencies of the bass, made visible as large waveforms in ferrofluid resting on speakers, give the listener a sensation of visceral fluidity, a feeling of physical expansion outward from one’s core, mirroring the concentric waves spreading across the ferrofluid surface. Thus, the audience can not only see and hear the physical properties he is exploring conceptually; those watching and listening can even feel them.

Ferrofluid is a curious substance: a suspension of magnetic particles in a liquid. It has several useful properties, including attraction to magnetic fields, the ability to conduct and absorb thermal energy, and the ability to deform (Scherer and Figueiredo Neto, 2005). Its commercial applications are diverse and include several roles in loudspeaker construction, both dissipating heat (the ferrofluid efficiently conducts heat away from components) and acting as a damper that attenuates unwanted vibrations (ibid.; Raj and Moskowitz, 1990).

For Stanford, audio-visual interchange is a conscious aim, and it can pose compositional challenges. When creating Cymatics, ‘the sand of the Chladni plate took a second to form its shape,’ which meant that he ‘needed to write a musical riff that held on to the same note long enough for the shapes to form.’ In addition, for visual clarity the sand ‘required single notes, not chords,’ which constrained the composition further. For this reason, his ‘planning of the visuals and the music are very intertwined and take place at a similar time.’
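Stanford’s two constraints can be expressed as simple arithmetic. The sketch below is a hypothetical illustration, not a description of his actual workflow. It takes the roughly one-second settling time he mentions and an assumed tempo, then works out the shortest note length, rounded up to a sixteenth note, that gives the sand time to form. It also flags riff events that are chords or are held too briefly. The function names and the `(notes, beats)` riff representation are our own inventions for this example.

```python
import math


def min_beats_to_hold(settle_seconds: float, bpm: float, subdivision: int = 4) -> float:
    """Shortest note length, in beats, lasting at least settle_seconds.

    The result is rounded up to the nearest 1/subdivision of a beat
    (subdivision=4 gives sixteenth notes when the beat is a quarter note).
    """
    # Multiply before dividing so that whole-number inputs stay exact.
    steps = math.ceil(settle_seconds * bpm * subdivision / 60.0)
    return steps / subdivision


def plate_friendly(riff, bpm: float, settle_seconds: float = 1.0) -> bool:
    """True if every event in the riff is a single note held long enough.

    riff is a list of (notes, beats) pairs: notes is a tuple of MIDI note
    numbers sounding together, beats is the event's length. This is a
    hypothetical representation chosen only for this sketch.
    """
    return all(
        len(notes) == 1 and beats * 60.0 / bpm >= settle_seconds
        for notes, beats in riff
    )


if __name__ == "__main__":
    # Hypothetical tempi; the one-second settling time is Stanford's estimate.
    for bpm in (90, 100, 120):
        print(bpm, "bpm ->", min_beats_to_hold(1.0, bpm), "beats")
    print(plate_friendly([((40,), 2.0), ((43,), 2.0)], bpm=120))  # True: single notes, 1 s each
    print(plate_friendly([((40, 43, 47), 2.0)], bpm=120))         # False: a chord
```

At faster tempi a beat is shorter, so more beats are needed to cover the same one-second settling time.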

The plate itself is an apparatus invented by Ernst Chladni, a German physicist and musician who is sometimes referred to as ‘the father of acoustics’ (Latifi, Wijaya, and Zhou, 2019). As the thin metal plate vibrates, it displaces particles resting on its surface, producing symmetrical patterns that shift as the input changes. In Chladni’s original demonstrations, the plate is bowed until it sounds. The vibration throws the sand off the moving regions, and it gathers along the nodal lines, where the plate stays still. These lines take different forms according to how, where, and at what angle the plate is bowed, and according to the shape of the plate itself. In Cymatics, we witness a more modern version: a keyboard is connected to the plate via a MIDI controller, and changing the note and the mode on the oscillator makes the sand form different shapes.
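To make the idea of nodal lines concrete, here is a minimal Python sketch built on a common textbook idealisation of a square plate. In this model a mode is written as a superposition of two standing waves, and sand is drawn wherever the displacement is close to zero. It is not a full model of a real plate’s vibration. The mode numbers are picked by hand rather than derived from a played note. The MIDI-to-frequency helper uses standard equal temperament with A4 (MIDI note 69) at 440 Hz, which is an assumption about how such a rig might be tuned.

```python
import math


def midi_to_hz(note: int) -> float:
    """Equal-tempered frequency of a MIDI note number, with A4 (note 69) = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


def chladni(x: float, y: float, n: int, m: int, side: float = 1.0) -> float:
    """Idealised displacement of a square plate vibrating in mode (n, m).

    This is the simplified superposition often used to draw Chladni figures,
    not an exact solution for a real plate. Sand collects where it is ~0.
    n and m must differ: with n == m the expression is zero everywhere.
    """
    k = math.pi / side
    return (math.cos(n * k * x) * math.cos(m * k * y)
            - math.cos(m * k * x) * math.cos(n * k * y))


def sand_map(n: int, m: int, size: int = 21, tol: float = 0.15) -> str:
    """ASCII picture of the plate: '#' marks points near a nodal line."""
    rows = []
    for j in range(size):
        y = j / (size - 1)
        rows.append("".join(
            "#" if abs(chladni(i / (size - 1), y, n, m)) < tol else "."
            for i in range(size)
        ))
    return "\n".join(rows)


if __name__ == "__main__":
    print(f"Middle C (MIDI 60) is about {midi_to_hz(60):.2f} Hz")  # 261.63 Hz
    print(sand_map(1, 4))
```

Changing `n` and `m` stands in for switching to a different mode: each pair produces a different symmetrical figure. In this idealisation the diagonal is always a nodal line.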

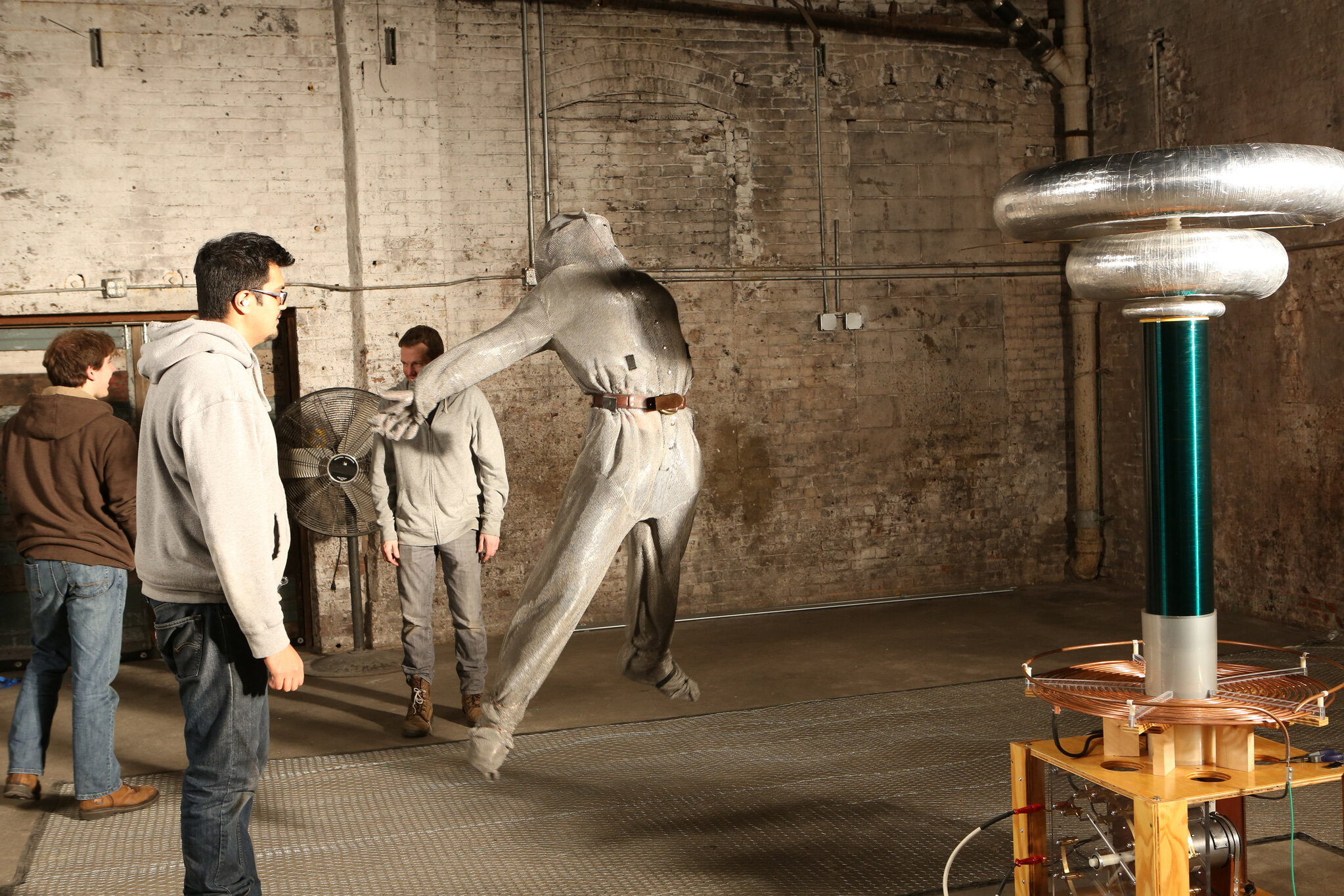

In addition to the ferrofluid and Chladni plate, the video incorporates other visual representations of sound. One of these employs a Tesla coil: a type of resonant electrical transformer that converts alternating current into very high-voltage, low-current, high-frequency alternating current (Cheney, 2011). Since its invention by Nikola Tesla in 1891, the coil has found many applications: in transmitting and receiving radio signals (Roguin, 2004), in a variety of experimental medical treatments (Graves, 2018), and as a possible method of wireless power transfer (Aziz et al., 2016).
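A Tesla coil works as a resonant transformer. Its primary and secondary circuits are tuned to the same frequency, which is given by the standard LC resonance formula f = 1/(2π√(LC)). The sketch below computes that frequency. It also finds the primary capacitance needed to match a given secondary. The component values are hypothetical round numbers chosen only for illustration. They are not the specifications of the coil used in Cymatics.

```python
import math


def resonant_frequency(inductance_h: float, capacitance_f: float) -> float:
    """Resonant frequency (Hz) of an LC circuit: f = 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


def matching_capacitance(inductance_h: float, frequency_hz: float) -> float:
    """Capacitance (F) that tunes the given inductance to frequency_hz."""
    return 1.0 / ((2.0 * math.pi * frequency_hz) ** 2 * inductance_h)


if __name__ == "__main__":
    # Hypothetical values: a 20 mH secondary with 15 pF of capacitance,
    # and a 10 uH primary coil that must be tuned to the same frequency.
    f_secondary = resonant_frequency(20e-3, 15e-12)
    c_primary = matching_capacitance(10e-6, f_secondary)
    print(f"secondary resonance: {f_secondary / 1e3:.1f} kHz")
    print(f"primary capacitor needed: {c_primary * 1e9:.1f} nF")
```

With these made-up values the secondary resonates at roughly 290 kHz, and the primary needs about 30 nF to match it.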

The ability of a Tesla coil to discharge long, loud sparks lends itself naturally to performance (Skeldon et al., 2000), as can be seen in the final minute of Cymatics. Stanford explains that although Tesla coils have been used in performance before, he was keen to innovate. ‘There are two things we did which I had never seen before,’ he says. ‘One was a moment where we shot the Rubens’ Tube with lightning from a Tesla Coil. The Fire Marshal on set told us it would be safe to do, and so we gave it a try, even though we were nervous.’ The other involved personal risk. Unafraid to experiment, the musician describes how ‘the final shot in the video, where I jumped and the electricity entered my head and exited my feet onto a wire mesh,’ was devised on set. ‘The theory was that it would form a circuit, but we didn't know if it would actually work until we tried it.’

Another invention that appears in Cymatics is the ‘Rubens’ tube’, a rudimentary oscilloscope that demonstrates the relationship between standing waves in a tube and acoustic pressure (Gardner et al., 2009). It consists of a closed tube that is filled with flammable gas and connected to a sound source. A row of small holes along the top lets the escaping gas be lit. As the tube resonates, the height of each flame corresponds to the pressure amplitude inside the tube at that point (Gardner et al., 2009). This visually exciting representation of sound waves has been used in educational demonstrations for over a hundred years (Gee, 2009) and has found considerable success as an outreach tool (e.g. Vongsawad et al., 2016; Mount, 2019). While the original demonstrations by the German physicists Rubens and Krigar-Menzel used a tuning fork, the creators of Cymatics used loudspeakers and MIDI to create standing waves.
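In the textbook idealisation of a tube closed at both ends, each standing wave has pressure antinodes at the ends. The resonant frequencies therefore follow f_n = n·c/(2L), where L is the tube length and c is the speed of sound in the gas inside. The sketch below lists those resonances and the positions of the pressure antinodes, where the pressure oscillation is largest. It also gives the nearest MIDI note to each resonance, roughly what a MIDI-driven setup would need to hit. The 2 m tube length is hypothetical. The 343 m/s default is the speed of sound in air at about 20 °C and is used only as a placeholder. Sound travels noticeably slower in propane and similar fuel gases, so a real tube’s resonances would be lower.

```python
import math


def resonances(length_m: float, speed_m_s: float = 343.0, count: int = 4) -> list:
    """First `count` resonant frequencies (Hz) of a tube closed at both ends: f_n = n*c/(2L)."""
    return [n * speed_m_s / (2.0 * length_m) for n in range(1, count + 1)]


def pressure_antinodes(length_m: float, n: int) -> list:
    """Positions (m) of pressure antinodes for mode n, spaced half a wavelength (L/n) apart."""
    return [k * length_m / n for k in range(n + 1)]


def nearest_midi(freq_hz: float) -> int:
    """Nearest equal-tempered MIDI note to a frequency, with A4 (note 69) = 440 Hz."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


if __name__ == "__main__":
    tube_length = 2.0  # hypothetical 2 m tube; air is assumed for the speed of sound
    for n, f in enumerate(resonances(tube_length), start=1):
        print(f"mode {n}: {f:.2f} Hz, nearest MIDI note {nearest_midi(f)}, "
              f"antinodes at {pressure_antinodes(tube_length, n)} m")
```

Higher modes pack more antinodes into the same tube, which is why stepping up through the resonances produces visibly tighter flame patterns.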

Automatica – Robots vs Music

For his 2017 album Automatica, Stanford particularly wanted ‘to explore the concepts of robotics, singularity, and artificial intelligence.’ He also ‘thought it would be cool to see a robot explode a piano.’ In a way, this exemplifies his approach to creation: while often both technically and philosophically interesting, his work is also driven by the pursuit of spectacle.

In creating the video for the album’s title track, Stanford and his team collaborated with KUKA, a German manufacturer of robotic systems for industry and the biomedical sciences. For this project, they enlisted KUKA Robotics and KUKA partner Andy Flessas, also known as AndyRobot, a robotics expert who has previously worked with artists such as Bon Jovi, Lady Gaga, and Deadmau5. While KUKA’s robots are more often found building cars or welding metal, their arms can be programmed to perform a wide variety of movements. In this instance, the KR10 Agilus model appears in the video as a musical collaborator. According to the manufacturer, ‘using robots in creative applications is rapidly expanding,’ and ‘making more space for human creativity is one major benefit of automation’ (Beaupre, 2017). Again, the technology used in Automatica shaped the musical composition itself: ‘the writing process involved figuring out what kinds of moves the robots could do,’ Stanford says, and it was necessary to devise ‘parts that fit within those limitations.’

Exploring the limits of the robotic arms was a process of discovery. To keep the visuals simple, Stanford wanted the robots’ limbs to retain their usual configuration and ‘didn’t want the robots to have multiple fingers.’ He found that some instruments were much harder for the robots to play than others. The keyboard instruments, the piano and synth, were comparatively simple because the distances between keys are consistent. The bass and DJ instruments demanded the most precise touch and pressure, so that the robots would not destroy the strings or turntables, and even with the robots’ 0.03 mm accuracy, these proved trickier.
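Keyboards are easier partly because their geometry is regular, so each key’s position can be calculated rather than measured individually. The minimal sketch below assumes a white-key pitch of about 23.5 mm, a typical figure for an acoustic piano, where an octave of seven white keys spans roughly 165 mm. It also places each black key midway between its neighbouring white keys, whereas real keyboards offset them slightly. With those assumptions, it maps a MIDI note to a horizontal target for a robot’s end effector. The function names and the choice of A0 as the origin are illustrative only. They are not taken from Stanford’s Maya setup.

```python
# Semitone offsets of the white keys within an octave (C, D, E, F, G, A, B),
# mapped to their index among the seven white keys.
WHITE_KEY_INDEX = {0: 0, 2: 1, 4: 2, 5: 3, 7: 4, 9: 5, 11: 6}


def white_key_position(note: int) -> float:
    """Position of a MIDI note, in white-key widths counted from MIDI note 0.

    Black keys are approximated as sitting halfway between their neighbours.
    """
    octave, pitch_class = divmod(note, 12)
    if pitch_class in WHITE_KEY_INDEX:
        return float(octave * 7 + WHITE_KEY_INDEX[pitch_class])
    # Every black key (C#, D#, F#, G#, A#) sits just above a white key.
    return octave * 7 + WHITE_KEY_INDEX[pitch_class - 1] + 0.5


def key_x_mm(note: int, origin_note: int = 21, white_key_mm: float = 23.5) -> float:
    """Horizontal offset (mm) of a key centre from origin_note (A0, MIDI 21, by default)."""
    return (white_key_position(note) - white_key_position(origin_note)) * white_key_mm


if __name__ == "__main__":
    for name, note in [("A0", 21), ("C4", 60), ("C#4", 61), ("D4", 62), ("C8", 108)]:
        print(f"{name}: {key_x_mm(note):.2f} mm")
```

Because the spacing is uniform, a single reference key and the key pitch are enough to place every target on the keyboard.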

The video concludes with a spectacular transition from musical collaboration with the robots to their widespread destruction of the instruments and set. We asked Stanford if he felt nervous during the destructive part of the shoot, but his concern seems to have been limited to the earlier stages: ‘programming the robots was a little nerve-wracking because if you made a mistake in programming, you could find yourself seriously hurt.’ Programming took four weeks before filming and was done in Stanford’s garage, using the 3D software Maya and a plug-in called Robot Animator.

There were interesting filming challenges too. The idea was to make it look as though there were sixteen robots, when there were actually just three. To achieve this effect, the team combined a motion-controlled camera on a track, robots positioned in the background and foreground of the same setup (shot more than once), and lengthy post-production work. The final shot took roughly eight passes and shows all sixteen robots.

UltraWave

So, what’s next for this wide-ranging musician? A team of Stanford, two C++ developers, and two web developers, all based in Wellington, New Zealand, is currently ‘working on a new project – a software synthesiser called UltraWave.’ The aim is to give musicians ‘a new form of synthesis which will allow [them] to make sounds that can't be made in any other way.’ The synthesiser is being written in C++ using the JUCE framework and will be available as a plug-in in VST and all other major formats. According to Stanford, they’re ‘hoping to finish the project early this year.’

To find out about UltraWave and more, please visit https://nigelstanford.com/

References:

Abd Aziz, P.D., Abd Razak, A.L., Bakar, M.I.A. and Aziz, N.A., 2016, October. A study on wireless power transfer using Tesla coil technique. In 2016 International Conference on Sustainable Energy Engineering and Application (ICSEEA) (pp. 34-40). IEEE.

Bamford, C.H., Jenkins, A.D. and Ward, J.C., 1960. The tesla-coil method for producing free radicals from solids. Nature, 186(4726), pp.712-713.

Beaupre, M., 2017. KUKA interview. Available at: https://www.kuka.com/en-gb/press/news/2017/09/nigel-stanford-automatica

Chladni, E., 2015. Treatise on Acoustics. Springer International Publishing, Switzerland. DOI: 10.1007/978-3-319-20361-4_10.

Cheney, M., 2011. Tesla: Man Out of Time. Simon and Schuster, p. 87.

Gardner, M.D., Gee, K.L. and Dix, G., 2009. An investigation of Rubens flame tube resonances. The Journal of the Acoustical Society of America, 125(3), pp.1285-1292.

Gee, K.L., 2009, October. The Rubens tube. In Proceedings of Meetings on Acoustics 158ASA (Vol. 8, No. 1, p. 025003). Acoustical Society of America.

Graves, D.B., 2018. Lessons from tesla for plasma medicine. IEEE Transactions on Radiation and Plasma Medical Sciences, 2(6), pp.594-607.

Latifi, K., Wijaya, H. and Zhou, Q., 2019. Motion of heavy particles on a submerged Chladni plate. Physical Review Letters, 122(18), 184301. DOI: 10.1103/PhysRevLett.122.184301.

Mount, A.G., 2019. Visualizing Music Theory with a Rubens Tube. Available at: http://purl.flvc.org/fsu/fd/FSU_libsubv1_scholarship_submission_1575661938_ef3add79

Raj, K. and Moskowitz, R., 1990. Commercial applications of ferrofluids. Journal of Magnetism and Magnetic Materials, 85(1-3), pp.233-245.

Roguin, A., 2004. Nikola Tesla: The man behind the magnetic field unit. Journal of Magnetic Resonance Imaging: An Official Journal of the International Society for Magnetic Resonance in Medicine, 19(3), pp.369-374.

Scherer, C. and Figueiredo Neto, A.M., 2005. Ferrofluids: properties and applications. Brazilian Journal of Physics, 35(3A), pp.718-727. Available at: https://www.scielo.br/scielo.php?pid=S0103-97332005000400018&script=sci_arttext

Skeldon, K.D., Grant, A.I., MacLellan, G. and McArthur, C., 2000. Development of a portable Tesla coil apparatus. European Journal of Physics, 21(2), p.125.

Vongsawad, C.T., Berardi, M.L., Neilsen, T.B., Gee, K.L., Whiting, J.K. and Lawler, M.J., 2016. Acoustics for the deaf: Can you see me now? The Physics Teacher, 54(6), pp.369-371.

All images and videos shown courtesy of © 2021 Nigel Stanford